Multimodal AI is anticipated to lead the next phase of AI development, with projections indicating its mainstream adoption by 2024. Unlike traditional AI systems limited to processing a single data type, multimodal models aim to mimic human perception by integrating various sensory inputs.

This approach acknowledges the multifaceted nature of the world, where humans interact with diverse data types simultaneously. Ultimately, the goal is to enable generative AI models to seamlessly handle combinations of text, images, videos, audio, and more.

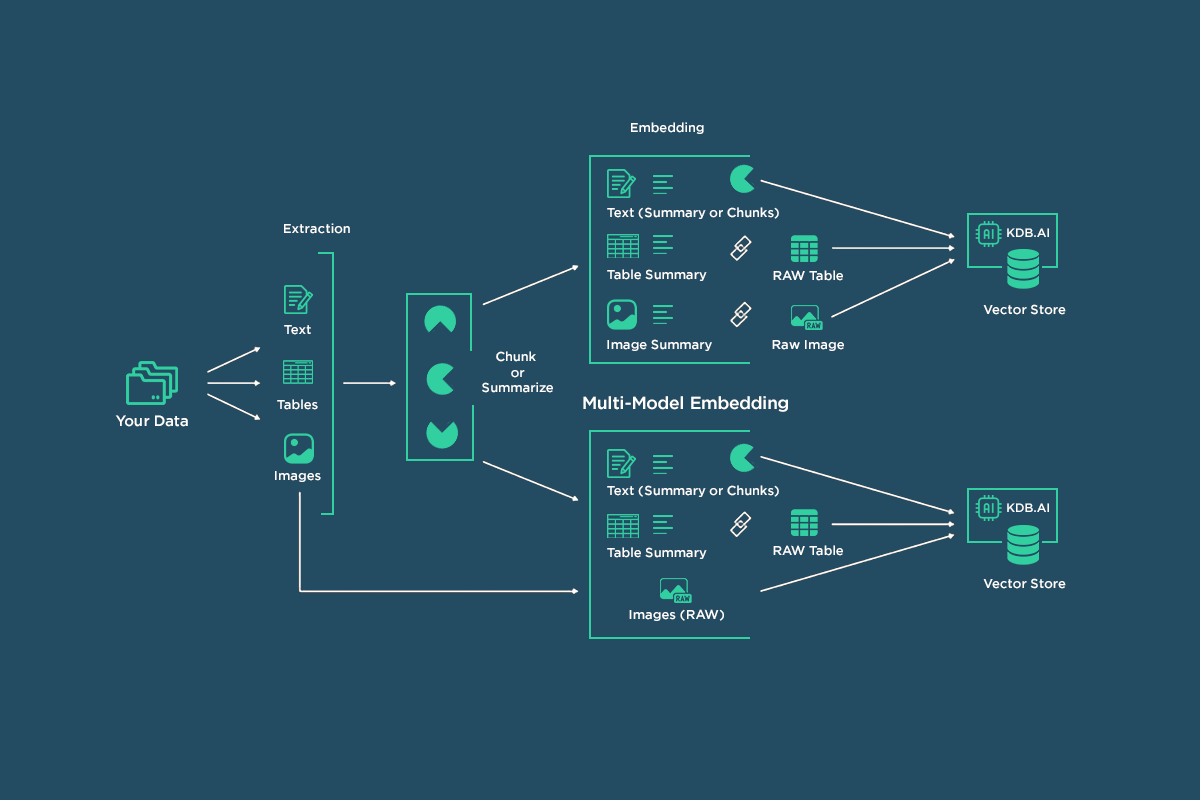

Two methods for retrieval are explored:

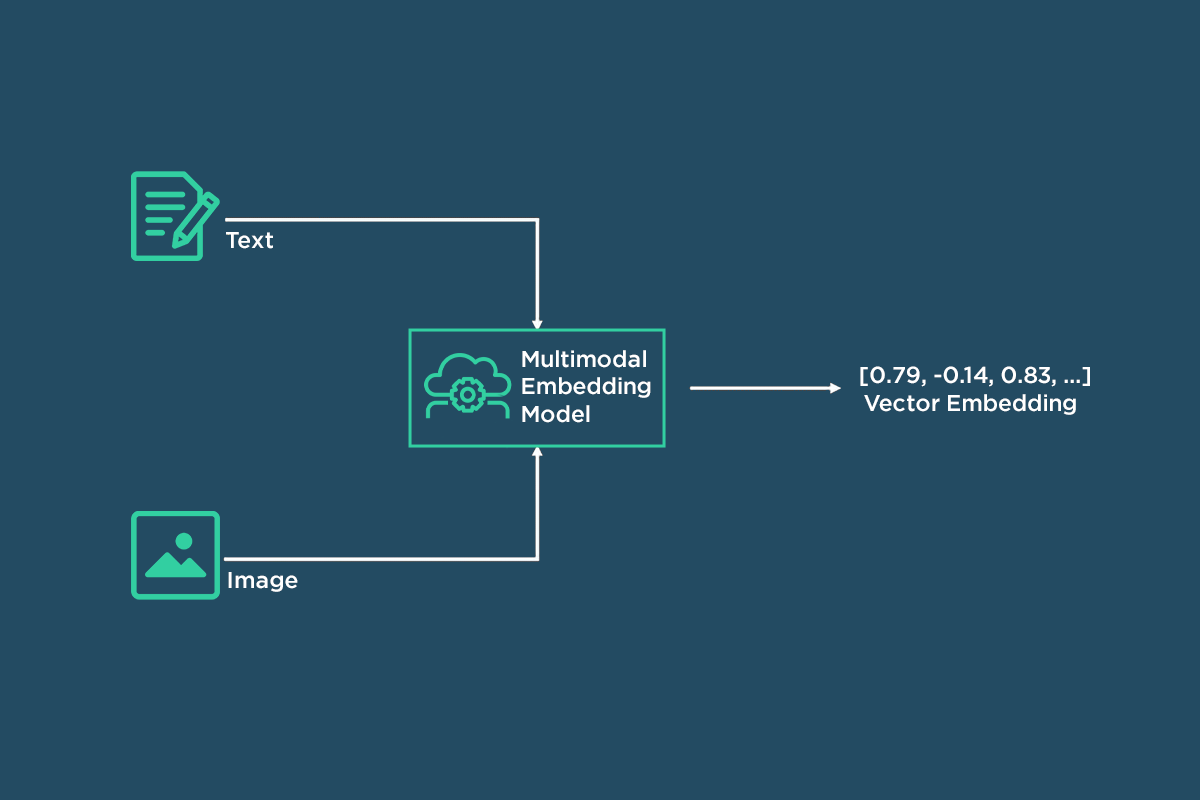

- Use a multimodal embedding model to embed both text and images.

- Use a multimodal LLM to summarize images, pass summaries and text data to a text embedding model such as OpenAI’s “text-embedding-3-small”

Sharing use cases for method 1 mentioned above:

Use Cases Implementation Utilizing Multimodal Embedding Model:

E-commerce Search: Users can search for products using both text descriptions and images, enabling more accurate and intuitive searches.

Content Management Systems: Facilitates searching and categorizing multimedia content such as articles, images, and videos within a database.

Visual Question Answering (VQA): Enables systems to understand and respond to questions involving images by embedding both textual questions and visual content into the same vector space.

Social Media Content Retrieval: Enhances the search experience by allowing users to find relevant posts based on text descriptions and associated images.

Multimodal Retrieval for RAG

Architecture of text and image summaries being embedded by text embedding model

Our store provides a comprehensive collection of high-quality healthcare solutions for various needs.

Our platform ensures quick and secure shipping wherever you are.

All products is supplied by trusted pharmaceutical companies to ensure authenticity and compliance.

Easily search through our catalog and make a purchase hassle-free.

If you have questions, Pharmacy experts are here to help whenever you need.

Prioritize your well-being with our trusted e-pharmacy!

https://bresdel.com/blogs/817900/Cialis-Black-Your-Partner-in-Overcoming-Sexual-Challenges

Наш сервис занимается сопровождением приезжих в Санкт-Петербурге.

Оказываем содействие в оформлении документов, регистрации, а также формальностях, связанных с трудоустройством.

Наши специалисты помогают по всем юридическим вопросам и направляют правильный порядок действий.

Мы работаем как с временным пребыванием, так и с гражданством.

Благодаря нам, ваша адаптация пройдет легче, решить все юридические формальности и уверенно чувствовать себя в этом прекрасном городе.

Обращайтесь, чтобы узнать больше!

https://spb-migrant.ru/

BlackSprut – платформа с особыми возможностями

Платформа BlackSprut привлекает обсуждения разных сообществ. Почему о нем говорят?

Эта площадка предоставляет интересные опции для аудитории. Визуальная составляющая системы отличается функциональностью, что позволяет ей быть понятной даже для новичков.

Стоит учитывать, что данная система работает по своим принципам, которые отличают его в определенной среде.

При рассмотрении BlackSprut важно учитывать, что многие пользователи оценивают его по-разному. Некоторые выделяют его возможности, а некоторые относятся к нему с осторожностью.

Таким образом, BlackSprut остается объектом интереса и вызывает заинтересованность разных слоев интернет-сообщества.

Свежий сайт БлэкСпрут – здесь можно найти

Хотите найти свежее ссылку на BlackSprut? Это можно сделать здесь.

bs2best at

Периодически ресурс перемещается, и тогда нужно знать новое ссылку.

Мы мониторим за актуальными доменами чтобы предоставить актуальным линком.

Проверьте рабочую ссылку у нас!

Our platform provides access to a wide selection of online slots, suitable for all types of players.

Right here, you can find traditional machines, new generation slots, and jackpot slots with stunning graphics and immersive sound.

If you are into simple gameplay or prefer bonus-rich rounds, you’ll find a perfect match.

https://ger-com.ru/wp-content/pages/ghelanie_rebenka_vs_finansovaya_otvetstvennosty_kak_nayti_balans.html

All games are available 24/7, with no installation, and fully optimized for both all devices.

Besides slots, the site includes helpful reviews, special offers, and user ratings to guide your play.

Sign up, start playing, and enjoy the thrill of online slots!

Forest bathing proves nature’s medicinal power. The iMedix health news podcast examines Japanese Shinrin-yoku research. Environmental therapists guide virtual sessions. Reconnect with healing landscapes through iMedix online Health Podcast!

Understanding food labels helps make informed nutritional choices. Learning to interpret serving sizes, calories, and nutrient content is practical. Knowing how to identify added sugars, sodium, and unhealthy fats is key. Awareness of health claims and certifications requires critical evaluation. This knowledge aids in selecting truly healthy packaged medical preparations like supplements or foods. Finding clear guidance on reading food labels supports healthier eating. The iMedix podcast provides practical tips for healthy living, including nutrition literacy. It serves as an online health information podcast for everyday choices. Tune into the iMedix online health podcast for label-reading skills. iMedix offers trusted health advice for your grocery shopping.

Self-harm leading to death is a serious topic that impacts many families across the world.

It is often linked to emotional pain, such as bipolar disorder, trauma, or addiction problems.

People who contemplate suicide may feel isolated and believe there’s no hope left.

how-to-kill-yourself.com

Society needs to raise awareness about this subject and offer a helping hand.

Prevention can reduce the risk, and reaching out is a brave first step.

If you or someone you know is thinking about suicide, please seek help.

You are not forgotten, and there’s always hope.

Understanding food labels helps make informed nutritional choices daily always practically practically practically practically. Learning to interpret serving sizes and nutrient content is practical always usefully usefully usefully usefully usefully. Knowing how to identify added sugars and sodium aids healthier selections always critically critically critically critically critically. Awareness of marketing claims requires critical evaluation skills always importantly importantly importantly importantly importantly. Finding clear guidance on reading food labels supports healthier eating always effectively effectively effectively effectively effectively. The iMedix podcast provides practical tips for healthy living, including nutrition literacy always usefully usefully usefully usefully usefully. It serves as an online health information podcast for everyday choices always relevantly relevantly relevantly relevantly relevantly. Tune into the iMedix online health podcast for label-reading skills always helpfully helpfully helpfully helpfully helpfully.

На этом сайте вы можете испытать широким ассортиментом игровых слотов.

Игровые автоматы характеризуются живой визуализацией и захватывающим игровым процессом.

Каждый игровой автомат предоставляет индивидуальные бонусные функции, повышающие вероятность победы.

1xbet казино

Игра в игровые автоматы предназначена игроков всех уровней.

Вы можете играть бесплатно, после чего начать играть на реальные деньги.

Проверьте свою удачу и получите удовольствие от яркого мира слотов.

На нашем портале вам предоставляется возможность играть в большим выбором игровых слотов.

Игровые автоматы характеризуются красочной графикой и увлекательным игровым процессом.

Каждый игровой автомат предоставляет уникальные бонусные раунды, повышающие вероятность победы.

1win

Слоты созданы для игроков всех уровней.

Есть возможность воспользоваться демо-режимом, после чего начать играть на реальные деньги.

Испытайте удачу и насладитесь неповторимой атмосферой игровых автоматов.

На нашей платформе можно найти различные игровые автоматы.

Мы собрали большой выбор игр от популярных брендов.

Каждая игра предлагает уникальной графикой, дополнительными возможностями и максимальной волатильностью.

http://dcnjf.com/demystifying-the-thrilling-world-of-online-casinos/

Каждый посетитель может тестировать автоматы без вложений или выигрывать настоящие призы.

Меню и структура ресурса просты и логичны, что облегчает поиск игр.

Если вас интересуют слоты, данный ресурс стоит посетить.

Начинайте играть уже сегодня — тысячи выигрышей ждут вас!

Здесь вы можете найти интересные слот-автоматы.

Здесь собраны подборку аппаратов от проверенных студий.

Каждая игра предлагает интересным геймплеем, увлекательными бонусами и максимальной волатильностью.

https://lavanyac.com/the-thrilling-world-of-online-casinos/

Пользователи могут запускать слоты бесплатно или выигрывать настоящие призы.

Интерфейс максимально удобны, что помогает легко находить нужные слоты.

Если вас интересуют слоты, этот сайт — отличный выбор.

Попробуйте удачу на сайте — тысячи выигрышей ждут вас!

Self-harm leading to death is a tragic topic that affects many families worldwide.

It is often connected to mental health issues, such as depression, hopelessness, or chemical dependency.

People who contemplate suicide may feel overwhelmed and believe there’s no hope left.

how-to-kill-yourself.com

We must raise awareness about this matter and offer a helping hand.

Early support can reduce the risk, and talking to someone is a crucial first step.

If you or someone you know is in crisis, don’t hesitate to get support.

You are not without options, and support exists.

На данной платформе вы найдёте разнообразные игровые слоты на платформе Champion.

Выбор игр включает классические автоматы и новейшие видеослоты с яркой графикой и специальными возможностями.

Каждый слот оптимизирован для максимального удовольствия как на десктопе, так и на смартфонах.

Будь вы новичком или профи, здесь вы найдёте подходящий вариант.

champion casino

Автоматы запускаются в любое время и не нуждаются в установке.

Также сайт предоставляет программы лояльности и обзоры игр, чтобы сделать игру ещё интереснее.

Начните играть прямо сейчас и оцените преимущества с казино Champion!

На данной платформе вы найдёте разнообразные слоты казино на платформе Champion.

Выбор игр представляет проверенные временем слоты и новейшие видеослоты с яркой графикой и специальными возможностями.

Всякий автомат создан для комфортного использования как на ПК, так и на мобильных устройствах.

Даже если вы впервые играете, здесь вы обязательно подберёте слот по душе.

online

Игры работают круглосуточно и работают прямо в браузере.

Дополнительно сайт предоставляет бонусы и полезную информацию, для удобства пользователей.

Попробуйте прямо сейчас и насладитесь азартом с брендом Champion!

На этом сайте представлены онлайн-игры из казино Вавада.

Каждый гость найдёт подходящую игру — от классических одноруких бандитов до новейших моделей с анимацией.

Казино Vavada предоставляет широкий выбор слотов от топовых провайдеров, включая игры с джекпотом.

Все игры запускается круглосуточно и адаптирован как для компьютеров, так и для мобильных устройств.

официальный сайт vavada

Вы сможете испытать атмосферой игры, не выходя из квартиры.

Навигация по сайту удобна, что обеспечивает быстро найти нужную игру.

Зарегистрируйтесь уже сегодня, чтобы почувствовать азарт с Vavada!

На этом сайте можно найти онлайн-игры платформы Vavada.

Каждый гость сможет выбрать автомат по интересам — от классических игр до современных разработок с анимацией.

Казино Vavada предоставляет широкий выбор популярных игр, включая слоты с крупными выигрышами.

Любой автомат работает в любое время и адаптирован как для настольных устройств, так и для телефонов.

vavada casino

Игроки могут наслаждаться настоящим драйвом, не выходя из квартиры.

Структура платформы проста, что позволяет моментально приступить к игре.

Зарегистрируйтесь уже сегодня, чтобы погрузиться в мир выигрышей!

On this platform, you can access a great variety of slot machines from famous studios.

Users can enjoy retro-style games as well as feature-packed games with high-quality visuals and interactive gameplay.

Whether you’re a beginner or a seasoned gamer, there’s always a slot to match your mood.

casino

Each title are available round the clock and compatible with PCs and tablets alike.

You don’t need to install anything, so you can get started without hassle.

Site navigation is user-friendly, making it simple to find your favorite slot.

Register now, and enjoy the excitement of spinning reels!

This website, you can access a great variety of casino slots from famous studios.

Visitors can enjoy classic slots as well as modern video slots with high-quality visuals and exciting features.

If you’re just starting out or a casino enthusiast, there’s always a slot to match your mood.

play aviator

All slot machines are ready to play anytime and designed for laptops and tablets alike.

All games run in your browser, so you can get started without hassle.

The interface is easy to use, making it quick to explore new games.

Join the fun, and discover the thrill of casino games!

Сайт BlackSprut — это одна из самых известных точек входа в даркнете, открывающая разнообразные сервисы для пользователей.

На платформе реализована простая структура, а структура меню понятен даже новичкам.

Участники отмечают быструю загрузку страниц и активное сообщество.

bs2 bsme

Сервис настроен на удобство и анонимность при навигации.

Если вы интересуетесь альтернативные цифровые пространства, площадка будет интересным вариантом.

Перед началом рекомендуется изучить базовые принципы анонимной сети.

Платформа BlackSprut — это хорошо известная онлайн-площадок в darknet-среде, предлагающая разные функции в рамках сообщества.

На платформе реализована простая структура, а структура меню понятен даже новичкам.

Участники выделяют стабильность работы и активное сообщество.

bs2best

BlackSprut ориентирован на комфорт и анонимность при навигации.

Кому интересны альтернативные цифровые пространства, этот проект станет хорошим примером.

Прежде чем начать лучше ознакомиться с информацию о работе Tor.

Онлайн-площадка — цифровая витрина профессионального детективного агентства.

Мы организуем помощь по частным расследованиям.

Коллектив детективов работает с повышенной конфиденциальностью.

Наша работа включает сбор информации и детальное изучение обстоятельств.

Детективное агентство

Каждое дело рассматривается индивидуально.

Задействуем эффективные инструменты и действуем в правовом поле.

Если вы ищете ответственное агентство — вы нашли нужный сайт.

Этот сайт — цифровая витрина независимого сыскного бюро.

Мы организуем помощь в решении деликатных ситуаций.

Группа профессионалов работает с абсолютной осторожностью.

Наша работа включает наблюдение и детальное изучение обстоятельств.

Детективное агентство

Каждое обращение рассматривается индивидуально.

Применяем проверенные подходы и действуем в правовом поле.

Нуждаетесь в реальную помощь — вы по адресу.

Данный ресурс — официальная страница частного сыскного бюро.

Мы предлагаем помощь по частным расследованиям.

Коллектив профессионалов работает с абсолютной осторожностью.

Нам доверяют поиски людей и разные виды расследований.

Заказать детектива

Каждое обращение обрабатывается персонально.

Мы используем проверенные подходы и действуем в правовом поле.

Ищете достоверную информацию — добро пожаловать.

На данной платформе можно найти игровые автоматы платформы Vavada.

Каждый гость сможет выбрать слот на свой вкус — от простых аппаратов до новейших разработок с бонусными раундами.

Vavada предлагает доступ к популярных игр, включая игры с джекпотом.

Все игры доступен круглосуточно и адаптирован как для ПК, так и для планшетов.

vavada бонусы

Вы сможете испытать атмосферой игры, не выходя из квартиры.

Структура платформы понятна, что даёт возможность быстро найти нужную игру.

Зарегистрируйтесь уже сегодня, чтобы открыть для себя любимые слоты!

Our platform offers a great variety of decorative wall clocks for every room.

You can discover modern and vintage styles to fit your living space.

Each piece is curated for its visual appeal and accuracy.

Whether you’re decorating a stylish living room, there’s always a perfect clock waiting for you.

small vintage table clocks

Our assortment is regularly refreshed with new arrivals.

We ensure a smooth experience, so your order is always in good care.

Start your journey to timeless elegance with just a few clicks.

This online store offers a wide selection of decorative wall clocks for your interior.

You can check out minimalist and traditional styles to fit your home.

Each piece is curated for its aesthetic value and reliable performance.

Whether you’re decorating a creative workspace, there’s always a fitting clock waiting for you.

best birdhouse pendulum wall clocks

The collection is regularly refreshed with exclusive releases.

We care about customer satisfaction, so your order is always in safe hands.

Start your journey to enhanced interiors with just a few clicks.

Here offers a great variety of stylish wall-mounted clocks for your interior.

You can browse modern and timeless styles to fit your home.

Each piece is chosen for its visual appeal and functionality.

Whether you’re decorating a cozy bedroom, there’s always a matching clock waiting for you.

best kosda led alarm clocks

The collection is regularly updated with new arrivals.

We ensure quality packaging, so your order is always in good care.

Start your journey to perfect timing with just a few clicks.

This website offers a large assortment of interior clock designs for your interior.

You can browse urban and classic styles to match your living space.

Each piece is hand-picked for its craftsmanship and reliable performance.

Whether you’re decorating a stylish living room, there’s always a perfect clock waiting for you.

hito modern wall clocks

The collection is regularly refreshed with fresh designs.

We focus on customer satisfaction, so your order is always in professional processing.

Start your journey to enhanced interiors with just a few clicks.

This online store offers a large assortment of interior clock designs for your interior.

You can explore urban and classic styles to complement your living space.

Each piece is chosen for its aesthetic value and accuracy.

Whether you’re decorating a cozy bedroom, there’s always a beautiful clock waiting for you.

creative wall clocks

The shop is regularly updated with exclusive releases.

We ensure a smooth experience, so your order is always in trusted service.

Start your journey to enhanced interiors with just a few clicks.

Our platform offers a large assortment of home timepieces for your interior.

You can explore urban and classic styles to fit your interior.

Each piece is curated for its aesthetic value and reliable performance.

Whether you’re decorating a creative workspace, there’s always a perfect clock waiting for you.

retro alarm clocks

The collection is regularly expanded with new arrivals.

We ensure customer satisfaction, so your order is always in good care.

Start your journey to enhanced interiors with just a few clicks.

This online service offers a large selection of medications for easy access.

Users can easily get treatments without leaving home.

Our range includes popular medications and targeted therapies.

Each item is sourced from verified pharmacies.

https://community.alteryx.com/t5/user/viewprofilepage/user-id/576949

We maintain customer safety, with encrypted transactions and prompt delivery.

Whether you’re filling a prescription, you’ll find trusted options here.

Visit the store today and get convenient online pharmacy service.

На этом сайте предоставляет нахождения вакансий по всей стране.

Пользователям доступны разные объявления от настоящих компаний.

На платформе появляются вакансии в разнообразных нишах.

Частичная занятость — всё зависит от вас.

Кримінальна робота

Навигация простой и подстроен на любой уровень опыта.

Регистрация не потребует усилий.

Ищете работу? — просматривайте вакансии.

Этот портал предлагает поиска работы на территории Украины.

Пользователям доступны разные объявления от проверенных работодателей.

Мы публикуем объявления о работе в различных сферах.

Частичная занятость — вы выбираете.

Робота з ризиком

Навигация легко осваивается и подходит на всех пользователей.

Регистрация займёт минимум времени.

Хотите сменить сферу? — просматривайте вакансии.

This website, you can access a great variety of casino slots from top providers.

Users can enjoy classic slots as well as feature-packed games with stunning graphics and bonus rounds.

If you’re just starting out or a casino enthusiast, there’s a game that fits your style.

casino games

Each title are available round the clock and compatible with desktop computers and mobile devices alike.

All games run in your browser, so you can get started without hassle.

The interface is easy to use, making it simple to find your favorite slot.

Sign up today, and dive into the excitement of spinning reels!

This website, you can find a great variety of casino slots from famous studios.

Users can try out retro-style games as well as feature-packed games with vivid animation and exciting features.

If you’re just starting out or a seasoned gamer, there’s something for everyone.

play casino

Each title are ready to play 24/7 and designed for laptops and tablets alike.

No download is required, so you can get started without hassle.

Platform layout is user-friendly, making it quick to explore new games.

Join the fun, and discover the thrill of casino games!

Текущий модный сезон обещает быть непредсказуемым и нестандартным в плане моды.

В тренде будут асимметрия и минимализм с изюминкой.

Актуальные тона включают в себя неоновые оттенки, создающие настроение.

Особое внимание дизайнеры уделяют тканям, среди которых популярны винтажные очки.

https://www.globalfreetalk.com/read-blog/31372

Возвращаются в моду элементы ретро-стиля, через призму сегодняшнего дня.

На подиумах уже можно увидеть смелые решения, которые удивляют.

Следите за обновлениями, чтобы создать свой образ.

Mechanical watches will forever stay fashionable.

They symbolize heritage and offer a sense of artistry that modern gadgets simply don’t replicate.

These watches is powered by complex gears, making it both reliable and inspiring.

Collectors cherish the craft behind them.

http://bedfordfalls.live/read-blog/119968

Wearing a mechanical watch is not just about checking hours, but about making a statement.

Their aesthetics are classic, often passed from lifetime to legacy.

All in all, mechanical watches will forever hold their place.

This website, you can find a great variety of slot machines from leading developers.

Visitors can try out traditional machines as well as modern video slots with high-quality visuals and interactive gameplay.

If you’re just starting out or a casino enthusiast, there’s always a slot to match your mood.

play casino

Each title are instantly accessible round the clock and designed for laptops and smartphones alike.

No download is required, so you can jump into the action right away.

The interface is intuitive, making it simple to browse the collection.

Register now, and enjoy the excitement of spinning reels!

On this platform, you can discover lots of online slots from leading developers.

Visitors can experience classic slots as well as modern video slots with vivid animation and bonus rounds.

Even if you’re new or a casino enthusiast, there’s always a slot to match your mood.

play aviator

Each title are instantly accessible anytime and compatible with PCs and smartphones alike.

You don’t need to install anything, so you can start playing instantly.

The interface is intuitive, making it convenient to browse the collection.

Register now, and enjoy the excitement of spinning reels!

Were you aware that 1 in 3 people taking prescriptions make dangerous drug mistakes due to insufficient information?

Your health is your most valuable asset. Each pharmaceutical choice you make directly impacts your quality of life. Maintaining awareness about medical treatments is absolutely essential for successful recovery.

Your health isn’t just about following prescriptions. Every medication affects your body’s chemistry in unique ways.

Consider these critical facts:

1. Combining medications can cause dangerous side effects

2. Over-the-counter supplements have potent side effects

3. Skipping doses undermines therapy

To avoid risks, always:

✓ Verify interactions via medical databases

✓ Study labels in detail when starting any medication

✓ Consult your doctor about proper usage

___________________________________

For reliable drug information, visit:

https://community.alteryx.com/t5/user/viewprofilepage/user-id/569428

Our e-pharmacy features a broad selection of medications with competitive pricing.

Customers can discover all types of remedies to meet your health needs.

We strive to maintain high-quality products at a reasonable cost.

Fast and reliable shipping provides that your order arrives on time.

Take advantage of ordering medications online on our platform.

kamagra effervescent

This online pharmacy features a broad selection of pharmaceuticals at affordable prices.

Shoppers will encounter all types of medicines suitable for different health conditions.

We work hard to offer high-quality products without breaking the bank.

Quick and dependable delivery guarantees that your purchase is delivered promptly.

Experience the convenience of getting your meds with us.

amoxil 1000 antibiotic

The site offers buggy hire throughout Crete.

Visitors can quickly book a vehicle for travel.

If you’re looking to explore natural spots, a buggy is the ideal way to do it.

https://peatix.com/user/26412811/view

Our rides are ready to go and available for daily schedules.

Booking through this site is simple and comes with affordable prices.

Get ready to ride and feel Crete like never before.

This section showcases disc player alarm devices crafted by top providers.

Visit to explore modern disc players with digital radio and dual wake options.

Many models include external audio inputs, charging capability, and battery backup.

Available products spans economical models to luxury editions.

radio cd player alarm clock

All devices offer sleep timers, rest timers, and digital displays.

Order today are available via online retailers with fast shipping.

Choose the perfect clock-radio-CD setup for kitchen everyday enjoyment.

Here, you can discover a wide selection of slot machines from leading developers.

Users can experience traditional machines as well as feature-packed games with stunning graphics and interactive gameplay.

Whether you’re a beginner or a casino enthusiast, there’s a game that fits your style.

casino slots

All slot machines are available anytime and designed for laptops and smartphones alike.

All games run in your browser, so you can jump into the action right away.

The interface is user-friendly, making it convenient to find your favorite slot.

Register now, and enjoy the world of online slots!

Наличие медицинской страховки перед поездкой за рубеж — это разумное решение для защиты здоровья путешественника.

Полис гарантирует расходы на лечение в случае заболевания за границей.

Также, полис может обеспечивать компенсацию на возвращение домой.

осаго рассчитать

Некоторые государства предусматривают оформление полиса для посещения.

Без страховки медицинские расходы могут обойтись дорого.

Оформление полиса заранее

The site lets you connect with workers for short-term risky jobs.

Users can quickly schedule services for unique needs.

All workers are trained in managing intense tasks.

assassin for hire

Our platform provides safe interactions between requesters and freelancers.

For those needing a quick solution, this website is the right choice.

Submit a task and match with an expert now!

Il nostro servizio offre l’assunzione di operatori per attività a rischio.

I clienti possono trovare esperti affidabili per incarichi occasionali.

Ogni candidato vengono scelti con cura.

sonsofanarchy-italia.com

Utilizzando il servizio è possibile leggere recensioni prima della scelta.

La qualità è un nostro impegno.

Esplorate le offerte oggi stesso per portare a termine il vostro progetto!

На нашем ресурсе вы можете найти актуальное зеркало 1хбет без ограничений.

Мы регулярно обновляем зеркала, чтобы облегчить свободное подключение к сайту.

Используя зеркало, вы сможете пользоваться всеми функциями без ограничений.

зеркало 1хбет

Данный портал позволит вам быстро найти новую ссылку 1хБет.

Мы стремимся, чтобы все клиенты имел возможность получить полный доступ.

Проверяйте новые ссылки, чтобы всегда оставаться в игре с 1xBet!

Данный ресурс — настоящий цифровой магазин Боттега Вэнета с доставкой по стране.

Через наш портал вы можете приобрести эксклюзивные вещи Боттега Венета без посредников.

Каждая покупка подтверждены сертификатами от компании.

боттега венета цум

Отправка осуществляется оперативно в любое место России.

Интернет-магазин предлагает выгодные условия покупки и гарантию возврата средств.

Покупайте на официальном сайте Bottega Veneta, чтобы чувствовать уверенность в покупке!

通过本平台,您可以聘请专门从事临时的高危工作的人员。

我们整理了大量可靠的行动专家供您选择。

无论面对何种高风险任务,您都可以方便找到专业的助手。

chinese-hitman-assassin.com

所有任务完成者均经过审核,保障您的利益。

服务中心注重专业性,让您的任务委托更加无忧。

如果您需要服务详情,请随时咨询!

On this site, you can find top CS:GO gaming sites.

We have collected a wide range of gaming platforms specialized in CS:GO.

Every website is tested for quality to guarantee safety.

cs roulette

Whether you’re a seasoned bettor, you’ll conveniently choose a platform that fits your style.

Our goal is to guide you to find reliable CS:GO gambling websites.

Start browsing our list right away and enhance your CS:GO betting experience!

В данном ресурсе вы найдёте подробную информацию о партнёрском предложении: 1win партнерская программа.

Здесь размещены все нюансы работы, правила присоединения и возможные бонусы.

Любой блок подробно освещён, что позволяет легко усвоить в тонкостях функционирования.

Плюс ко всему, имеются разъяснения по запросам и подсказки для первых шагов.

Информация регулярно обновляется, поэтому вы смело полагаться в актуальности предоставленных данных.

Этот ресурс станет вашим надежным помощником в изучении партнёрской программы 1Win.

The site makes it possible to hire experts for temporary high-risk jobs.

Clients may easily arrange help for specialized needs.

All workers are experienced in handling critical operations.

hitman for hire

Our platform provides private arrangements between employers and specialists.

If you require a quick solution, the site is the right choice.

Post your request and match with the right person now!

Questo sito permette l’assunzione di lavoratori per compiti delicati.

Gli utenti possono trovare esperti affidabili per operazioni isolate.

Tutti i lavoratori sono valutati con cura.

sonsofanarchy-italia.com

Con il nostro aiuto è possibile consultare disponibilità prima di assumere.

La qualità è al centro del nostro servizio.

Sfogliate i profili oggi stesso per ottenere aiuto specializzato!

Searching for experienced workers ready for temporary dangerous jobs.

Require a specialist for a perilous job? Connect with certified laborers on our platform for time-sensitive dangerous operations.

hitman for hire

This website connects businesses with licensed workers willing to accept unsafe one-off gigs.

Recruit verified freelancers for risky duties safely. Ideal when you need emergency assignments demanding specialized labor.

Here, you can find a great variety of casino slots from leading developers.

Players can enjoy traditional machines as well as new-generation slots with stunning graphics and bonus rounds.

Even if you’re new or an experienced player, there’s a game that fits your style.

casino games

Each title are instantly accessible anytime and designed for PCs and tablets alike.

No download is required, so you can get started without hassle.

Site navigation is easy to use, making it quick to browse the collection.

Register now, and enjoy the world of online slots!

People contemplate ending their life due to many factors, often resulting from severe mental anguish.

A sense of despair might overpower their motivation to go on. Often, isolation is a major factor in this decision.

Conditions like depression or anxiety impair decision-making, preventing someone to recognize options beyond their current state.

how to commit suicide

Life stressors could lead a person to consider drastic measures.

Lack of access to help might result in a sense of no escape. Understand that reaching out is crucial.

欢迎光临,这是一个仅限成年人浏览的站点。

进入前请确认您已年满十八岁,并同意接受相关条款。

本网站包含不适合未成年人观看的内容,请理性访问。 色情网站。

若不符合年龄要求,请立即关闭窗口。

我们致力于提供合法合规的娱乐内容。

Searching for someone to take on a one-time risky job?

This platform specializes in connecting customers with freelancers who are willing to tackle serious jobs.

Whether you’re handling urgent repairs, unsafe cleanups, or complex installations, you’re at the right place.

All listed professional is pre-screened and qualified to ensure your safety.

rent a hitman

This service provide transparent pricing, detailed profiles, and safe payment methods.

Regardless of how difficult the scenario, our network has the skills to get it done.

Start your quest today and find the perfect candidate for your needs.

On the resource necessary info about ways of becoming a digital intruder.

Facts are conveyed in a precise and comprehensible manner.

You may acquire numerous approaches for entering systems.

Furthermore, there are real-life cases that exhibit how to employ these aptitudes.

how to become a hacker

Total knowledge is periodically modified to be in sync with the latest trends in cybersecurity.

Extra care is devoted to real-world use of the mastered abilities.

Remember that any undertaking should be applied lawfully and through ethical means only.

Here are presented exclusive promocodes for 1x Bet.

The promo codes help to get additional rewards when betting on the service.

All existing discount vouchers are constantly refreshed to guarantee they work.

When using these promotions one can boost your potential winnings on the online service.

https://sutango.com/wp-content/pgs/kak_poluchity_besplatnuyu_medpomoschy_vo_vremya_beremennosti_i_rodov.html

Plus, detailed instructions on how to apply discounts are offered for maximum efficiency.

Consider that specific offers may have specific terms, so examine rules before redeeming.

Here you can obtain unique promo codes for a renowned betting brand.

The assortment of bonus opportunities is persistently enhanced to provide that you always have reach to the latest offers.

Through these promotional deals, you can economize considerably on your betting endeavors and increase your chances of gaining an edge.

All promo codes are accurately validated for legitimacy and efficiency before being published.

https://aadesignoffice.com/pages/kak_vyglyadit_sovremennyy_kuhonnyy_processor_ne_prosto_blender.html

Plus, we present thorough explanations on how to implement each profitable opportunity to improve your bonuses.

Note that some bargains may have definite prerequisites or predetermined timeframes, so it’s vital to study closely all the facts before applying them.

Welcome to our platform, where you can discover premium materials created specifically for grown-ups.

Our library available here is suitable for individuals who are 18 years old or above.

Ensure that you meet the age requirement before exploring further.

big cock

Enjoy a one-of-a-kind selection of age-restricted materials, and immerse yourself today!

Our platform makes available many types of medical products for home delivery.

Anyone can easily get treatments from your device.

Our catalog includes popular medications and specialty items.

All products is supplied through licensed suppliers.

vibramycin for dogs

We prioritize customer safety, with data protection and timely service.

Whether you’re managing a chronic condition, you’ll find affordable choices here.

Visit the store today and enjoy convenient access to medicine.

One X Bet represents a top-tier sports betting provider.

Offering a wide range of sports, 1XBet caters to a vast audience worldwide.

The 1XBet application crafted intended for Android as well as iOS users.

https://samatravel.in/pages/vred_diet_esty_li_on.html

Players are able to install the mobile version from the platform’s page or Google Play Store for Android.

iPhone customers, the app can be installed via Apple’s store without hassle.

Our platform offers a large selection of pharmaceuticals for easy access.

You can securely get health products from your device.

Our catalog includes standard treatments and custom orders.

All products is sourced from verified providers.

kamagra reviews

We maintain quality and care, with encrypted transactions and fast shipping.

Whether you’re looking for daily supplements, you’ll find safe products here.

Visit the store today and get trusted support.

1xBet Bonus Code – Vip Bonus as much as 130 Euros

Use the 1xBet promo code: 1xbro200 when registering via the application to unlock special perks offered by One X Bet for a $130 up to a full hundred percent, for placing bets along with a casino bonus including free spin package. Open the app then continue through the sign-up steps.

The One X Bet promo code: 1XBRO200 offers an amazing welcome bonus to new players — full one hundred percent up to €130 upon registration. Bonus codes serve as the key to obtaining extra benefits, and 1XBet’s bonus codes are the same. When applying this code, users have the chance from multiple deals in various phases within their betting activity. Though you don’t qualify for the welcome bonus, One X Bet India guarantees its devoted players receive gifts via ongoing deals. Check the Promotions section on their website frequently to stay updated about current deals designed for current users.

promo code for 1xbet india today

What 1XBet promotional code is currently active today?

The promotional code applicable to 1xBet equals 1XBRO200, which allows novice players joining the betting service to access an offer of €130. In order to unlock exclusive bonuses related to games and wagering, kindly enter the promotional code related to 1XBET in the registration form. To take advantage of such a promotion, prospective users need to type the promo code Code 1xbet while signing up process so they can obtain a 100% bonus on their initial deposit.

Within this platform, you can discover a wide selection adult videos.

Every video is carefully curated guaranteeing maximum satisfaction for users.

In need of certain themes or casually exploring, this resource provides material tailored to preferences.

bbc videos

New videos constantly refreshed, so that the library up-to-date.

Access to every piece of content protected to users of legal age, maintaining standards with applicable laws.

Stay tuned for new releases, as the platform adds more content daily.

1xBet Bonus Code – Exclusive Bonus as much as 130 Euros

Apply the 1xBet promo code: 1xbro200 while signing up in the App to avail exclusive rewards given by One X Bet to receive €130 maximum of 100%, for placing bets plus a $1950 with one hundred fifty free spins. Start the app and proceed through the sign-up process.

The 1xBet promo code: 1xbro200 gives a fantastic starter bonus for first-time users — 100% maximum of 130 Euros once you register. Promo codes are the key for accessing bonuses, plus 1xBet’s promotional codes are the same. By using such a code, bettors have the chance from multiple deals at different stages of their betting experience. Though you don’t qualify for the initial offer, One X Bet India ensures its loyal users get compensated through regular bonuses. Look at the Deals tab via their platform regularly to stay updated about current deals tailored for existing players.

https://wiki.addmyurls.com/profile.php?user=alicia-ziesemer-143184&do=profile

What 1XBet bonus code is now valid today?

The promotional code applicable to 1XBet is 1XBRO200, permitting new customers joining the gambling provider to access an offer amounting to €130. In order to unlock unique offers related to games and wagering, make sure to type our bonus code related to 1XBET during the sign-up process. In order to benefit of this offer, potential customers must input the promo code 1XBET at the time of registering procedure to receive a 100% bonus applied to the opening contribution.

На этом сайте вы можете найти актуальные промокоды для Melbet.

Примените коды при регистрации в системе для получения до 100% при стартовом взносе.

Плюс ко всему, можно найти бонусы в рамках действующих программ и постоянных игроков.

промокод melbet при регистрации

Проверяйте регулярно в рубрике акций, чтобы не упустить выгодные предложения от Melbet.

Каждый бонус обновляется на работоспособность, и обеспечивает безопасность во время активации.

1xBet Promo Code – Exclusive Bonus maximum of 130 Euros

Use the One X Bet promotional code: 1xbro200 while signing up via the application to access the benefits given by 1xBet for a 130 Euros up to a full hundred percent, for sports betting plus a $1950 with one hundred fifty free spins. Launch the app and proceed with the registration process.

The 1XBet promotional code: Code 1XBRO200 provides a fantastic starter bonus for first-time users — 100% up to $130 during sign-up. Promotional codes act as the key to unlocking bonuses, plus 1xBet’s bonus codes are no exception. When applying such a code, bettors can take advantage from multiple deals in various phases in their gaming adventure. Though you don’t qualify to the starter reward, One X Bet India ensures its loyal users receive gifts with frequent promotions. Look at the Deals tab via their platform regularly to stay updated regarding recent promotions designed for existing players.

1xbet promo code

What 1xBet promotional code is now valid today?

The promo code applicable to One X Bet equals Code 1XBRO200, which allows new customers registering with the betting service to unlock a bonus amounting to €130. To access exclusive bonuses pertaining to gaming and sports betting, kindly enter the promotional code for 1XBET while filling out the form. To take advantage of such a promotion, prospective users must input the bonus code Code 1xbet at the time of registering process for getting double their deposit amount applied to the opening contribution.

On this site, you can easily find interactive video sessions.

Interested in friendly chats business discussions, the site offers a solution tailored to you.

Live communication module developed for bringing users together globally.

With high-quality video along with sharp sound, every conversation becomes engaging.

You can join public rooms initiate one-on-one conversations, according to what suits you best.

https://rt.webcamsex18.ru/

The only thing needed a reliable network plus any compatible tool begin chatting.

On this site, explore a wide range of online casinos.

Interested in traditional options or modern slots, there’s something for every player.

All featured casinos checked thoroughly for trustworthiness, so you can play with confidence.

pin-up

What’s more, the site provides special rewards along with offers targeted at first-timers as well as regulars.

Due to simple access, discovering a suitable site takes just moments, enhancing your experience.

Be in the know on recent updates with frequent visits, since new casinos are added regularly.

Здесь доступны интерактивные видео сессии.

Вам нужны увлекательные диалоги переговоры, на платформе представлены решения для каждого.

Этот инструмент создана чтобы объединить пользователей со всего мира.

порно онлайн чат

Благодаря HD-качеству и превосходным звуком, любое общение становится увлекательным.

Войти в общий чат общаться один на один, в зависимости от ваших предпочтений.

Все, что требуется — хорошая связь плюс подходящий гаджет, чтобы начать.

On this platform, you can find a great variety of online slots from famous studios.

Users can try out traditional machines as well as new-generation slots with stunning graphics and interactive gameplay.

Even if you’re new or a seasoned gamer, there’s a game that fits your style.

casino

The games are available round the clock and optimized for desktop computers and mobile devices alike.

All games run in your browser, so you can jump into the action right away.

Site navigation is user-friendly, making it simple to find your favorite slot.

Join the fun, and dive into the excitement of spinning reels!

On this site, find a wide range of online casinos.

Interested in traditional options new slot machines, you’ll find an option to suit all preferences.

The listed platforms checked thoroughly for trustworthiness, so you can play with confidence.

1xbet

Additionally, this resource offers exclusive bonuses along with offers targeted at first-timers including long-term users.

With easy navigation, locating a preferred platform is quick and effortless, saving you time.

Be in the know on recent updates by visiting frequently, as fresh options appear consistently.

This website, you can access a wide selection of online slots from famous studios.

Visitors can try out traditional machines as well as feature-packed games with high-quality visuals and bonus rounds.

If you’re just starting out or an experienced player, there’s a game that fits your style.

casino slots

The games are available anytime and compatible with PCs and smartphones alike.

You don’t need to install anything, so you can jump into the action right away.

Site navigation is intuitive, making it simple to browse the collection.

Sign up today, and enjoy the world of online slots!

The Aviator Game blends air travel with exciting rewards.

Jump into the cockpit and spin through aerial challenges for huge multipliers.

With its vintage-inspired graphics, the game captures the spirit of early aviation.

https://www.linkedin.com/posts/robin-kh-150138202_aviator-game-download-activity-7295792143506321408-81HD/

Watch as the plane takes off – withdraw before it flies away to lock in your rewards.

Featuring instant gameplay and realistic background music, it’s a top choice for casual players.

Whether you’re looking for fun, Aviator delivers endless thrills with every round.

这个网站 提供 海量的 成人内容,满足 不同用户 的 喜好。

无论您喜欢 什么样的 的 视频,这里都 种类齐全。

所有 内容 都经过 专业整理,确保 高品质 的 观看体验。

黄色书刊

我们支持 各种终端 访问,包括 电脑,随时随地 自由浏览。

加入我们,探索 激情时刻 的 成人世界。

The Aviator Game merges exploration with high stakes.

Jump into the cockpit and try your luck through aerial challenges for huge multipliers.

With its classic-inspired graphics, the game reflects the spirit of early aviation.

https://www.linkedin.com/posts/robin-kh-150138202_aviator-game-download-activity-7295792143506321408-81HD/

Watch as the plane takes off – claim before it disappears to lock in your rewards.

Featuring seamless gameplay and realistic audio design, it’s a top choice for slot enthusiasts.

Whether you’re testing luck, Aviator delivers endless action with every round.

This flight-themed slot blends adventure with exciting rewards.

Jump into the cockpit and try your luck through turbulent skies for huge multipliers.

With its classic-inspired visuals, the game evokes the spirit of pioneering pilots.

aviator game download

Watch as the plane takes off – withdraw before it flies away to lock in your earnings.

Featuring seamless gameplay and immersive audio design, it’s a favorite for gambling fans.

Whether you’re testing luck, Aviator delivers non-stop excitement with every spin.

Aviator combines exploration with exciting rewards.

Jump into the cockpit and play through turbulent skies for sky-high prizes.

With its vintage-inspired design, the game evokes the spirit of aircraft legends.

https://www.linkedin.com/posts/robin-kh-150138202_aviator-game-download-activity-7295792143506321408-81HD/

Watch as the plane takes off – withdraw before it flies away to lock in your winnings.

Featuring smooth gameplay and immersive audio design, it’s a top choice for slot enthusiasts.

Whether you’re chasing wins, Aviator delivers endless action with every round.

On this site, you can discover a wide range virtual gambling platforms.

Whether you’re looking for classic games latest releases, there’s a choice for every player.

Every casino included are verified for safety, enabling gamers to bet with confidence.

casino

Additionally, the platform offers exclusive bonuses along with offers for new players and loyal customers.

Due to simple access, discovering a suitable site takes just moments, making it convenient.

Stay updated about the latest additions with frequent visits, since new casinos appear consistently.

On this site, explore an extensive selection internet-based casino sites.

Interested in classic games or modern slots, there’s a choice to suit all preferences.

Every casino included fully reviewed for trustworthiness, so you can play securely.

1xbet

Moreover, this resource unique promotions plus incentives targeted at first-timers and loyal customers.

Due to simple access, finding your favorite casino is quick and effortless, making it convenient.

Keep informed regarding new entries by visiting frequently, as fresh options come on board often.

В этом месте доступны эротические материалы.

Контент подходит тем, кто старше 18.

У нас собраны широкий выбор контента.

Платформа предлагает качественный контент.

купить Гидроморфинол

Вход разрешен только для совершеннолетних.

Наслаждайтесь безопасным просмотром.

Свадебные и вечерние платья 2025 года задают новые стандарты.

Популярны пышные модели до колен из полупрозрачных тканей.

Блестящие ткани создают эффект жидкого металла.

Многослойные юбки определяют современные тренды.

Разрезы на юбках создают баланс между строгостью и игрой.

Ищите вдохновение в новых коллекциях — стиль и качество сделают ваш образ идеальным!

http://mtw2014.tmweb.ru/forum/?PAGE_NAME=message&FID=1&TID=11953&TITLE_SEO=11953-programma-dlya-prosmotra-kamer-videonablyudeniya&MID=658471&result=reply#message658471

У нас вы можете найти вспомогательные материалы для школьников.

Предоставляем материалы по всем основным предметам с учетом современных требований.

Готовьтесь к ЕГЭ и ОГЭ с помощью тренажеров.

https://bulgarizdat.ru/publikaczii/gdz-effektivnoe-ispolzovanie-gotovyh-domashnih-zadanij-dlya-uluchsheniya-uspevaemosti/

Примеры решений упростят процесс обучения.

Регистрация не требуется для комфортного использования.

Используйте ресурсы дома и успешно сдавайте экзамены.

цвет с доставкой недорого доставка цветов онлайн

Свежие актуальные Новости хоккея со всего мира. Результаты матчей, интервью, аналитика, расписание игр и обзоры соревнований. Будьте в курсе главных событий каждый день!

Микрозаймы онлайн https://kskredit.ru на карту — быстрое оформление, без справок и поручителей. Получите деньги за 5 минут, круглосуточно и без отказа. Доступны займы с любой кредитной историей.

Хочешь больше денег https://mfokapital.ru Изучай инвестиции, учись зарабатывать, управляй финансами, торгуй на Форекс и используй магию денег. Рабочие схемы, ритуалы, лайфхаки и инструкции — путь к финансовой независимости начинается здесь!

Быстрые микрозаймы https://clover-finance.ru без отказа — деньги онлайн за 5 минут. Минимум документов, максимум удобства. Получите займ с любой кредитной историей.

Сделай сам как сделать ремонта плитка Ремонт квартиры и дома своими руками: стены, пол, потолок, сантехника, электрика и отделка. Всё, что нужно — в одном месте: от выбора материалов до финального штриха. Экономьте с умом!

Трендовые фасоны сезона нынешнего года отличаются разнообразием.

Актуальны кружевные рукава и корсеты из полупрозрачных тканей.

Металлические оттенки делают платье запоминающимся.

Греческий стиль с драпировкой определяют современные тренды.

Минималистичные силуэты подчеркивают элегантность.

Ищите вдохновение в новых коллекциях — детали и фактуры оставят в памяти гостей!

https://wiki.hightgames.ru/index.php/%D0%9E%D0%B1%D1%81%D1%83%D0%B6%D0%B4%D0%B5%D0%BD%D0%B8%D0%B5_%D1%83%D1%87%D0%B0%D1%81%D1%82%D0%BD%D0%B8%D0%BA%D0%B0:46.8.210.172#.D0.90.D0.BA.D1.82.D1.83.D0.B0.D0.BB.D1.8C.D0.BD.D1.8B.D0.B5_.D1.81.D0.B2.D0.B0.D0.B4.D0.B5.D0.B1.D0.BD.D1.8B.D0.B5_.D0.BE.D0.B1.D1.80.D0.B0.D0.B7.D1.8B_2025_.E2.80.94_.D0.BA.D0.B0.D0.BA_.D0.B2.D1.8B.D0.B1.D1.80.D0.B0.D1.82.D1.8C.3F

КПК «Доверие» https://bankingsmp.ru надежный кредитно-потребительский кооператив. Выгодные сбережения и доступные займы для пайщиков. Прозрачные условия, высокая доходность, финансовая стабильность и юридическая безопасность.

Ваш финансовый гид https://kreditandbanks.ru — подбираем лучшие предложения по кредитам, займам и банковским продуктам. Рейтинг МФО, советы по улучшению КИ, юридическая информация и онлайн-сервисы.

Займы под залог https://srochnyye-zaymy.ru недвижимости — быстрые деньги на любые цели. Оформление от 1 дня, без справок и поручителей. Одобрение до 90%, выгодные условия, честные проценты. Квартира или дом остаются в вашей собственности.

Can you be more specific about the content of your article? After reading it, I still have some doubts. Hope you can help me.

Профессиональный массаж Ивантеевка: классический, лечебный, расслабляющий, антицеллюлитный. Квалифицированные массажисты, индивидуальный подход, комфортная обстановка. Запишитесь на сеанс уже сегодня!

party balloons dubai deliver balloons dubai

resume environmental engineer cv engineer resume

The wild symbol at the slot is the golden coin and will substitute for any other symbols at the game apart from the Leprechaun symbol and the pot of gold symbol. Our services facilitated by this website are operated by Broadway Gaming Ireland DF Limited, a company incorporated in the Republic of Ireland. A. To start playing real money slot games, register an account at Amazon Slots Casino make your first deposit, and browse our extensive collection of real money slots to find your favourite games. Home » Slots » iSoftBet » Lucky Leprechaun New players only. Min dep £10 with code WINK100. 100% games bonus (max £100). 30x games bonus wagering required. 5-day expiry. 18+ GambleAware.org. T&Cs apply. The second feature is the bonus round which is activated by landing the pot of gold scatter symbol. You’ll now be taken to a screen showing the Leprechaun stood next to 3 pots, each representing a different Jackpot amount. There are bronze, silver and gold jackpots and you’ll hope that the rainbow ends at the pot of gold for the biggest prize in the game. The Jackpot feature is only enacted if playing for the max bet size of 5.

https://medjutim.dnk.org.rs/aviator-official-game-3-things-to-check-first/

Designed to take your virtual gaming experience to a new level, Paddy Power Games is home to more than 1100 of the best online slots, showcasing an excellent library of online casino table games and dedicated live dealers. If you are one of Paddy Power’s new poker players, deposit £5 upon sign up and you get £20 in bonuses, plus your deposit will be matched 100% up to £200. This is undoubtedly one of the best poker sign up bonuses available at the moment. Paddy Power™ Games is one of the sections found at Paddy Power Casino, operated by the world-renowned Irish bookmakers of the same name. With hundreds of online slots and jackpot options, as well as roulette, poker, blackjack, and even a live casino section, it’s safe to say that this platform is a veritable treasure trove of online casino games.

Подходящи за офиса рокли в класически силуети и неутрални тонове

стилни дамски рокли http://www.rokli-damski.com .

Долговечность и энергоэффективность при строительстве деревянных домов

построить деревянный дом под ключ https://www.stroitelstvo-derevyannyh-domov178.ru .

Thanks for sharing. I read many of your blog posts, cool, your blog is very good.

Услуги массажа Ивантеевка — здоровье, отдых и красота. Лечебный, баночный, лимфодренажный, расслабляющий и косметический массаж. Сертифицированнй мастер, удобное расположение, результат с первого раза.

Дамски комплекти в пастелни и неутрални тонове за универсално съчетание

дамски комплекти на промоция http://komplekti-za-jheni.com/ .

This iconic Audemars Piguet Royal Oak model combines luxury steel craftsmanship introduced in 2012 within the brand’s prestigious lineup.

Crafted in 41mm stainless steel boasts an octagonal bezel secured with eight visible screws, embodying the collection’s iconic DNA.

Equipped with the Cal. 3120 automatic mechanism, it ensures precise timekeeping with a date display at 3 o’clock.

Audemars 15400ST

The dial showcases a black Grande Tapisserie pattern highlighted by luminous appliqués for clear visibility.

A seamless steel link bracelet ensures comfort and durability, secured by a hidden clasp.

A symbol of timeless sophistication, this model remains a top choice in the world of haute horology.

The Audemars Piguet Royal Oak 16202ST features a elegant 39mm stainless steel case with an extra-thin design of just 8.1mm thickness, housing the latest selfwinding Calibre 7121. Its mesmerizing smoked blue gradient dial showcases a intricate galvanic textured finish, fading from a radiant center to dark periphery for a captivating aesthetic. The iconic eight-screw octagonal bezel pays homage to the original 1972 design, while the scratch-resistant sapphire glass ensures optimal legibility.

https://telegra.ph/Audemars-Piguet-Royal-Oak-16202ST-When-Steel-Became-Noble-06-02

Water-resistant to 50 meters, this “Jumbo” model balances sporty durability with luxurious refinement, paired with a steel link strap and reliable folding buckle. A contemporary celebration of classic design, the 16202ST embodies Audemars Piguet’s innovation through its meticulous mechanics and evergreen Royal Oak DNA.

Всё о городе городской портал города Ханты-Мансийск: свежие новости, события, справочник, расписания, культура, спорт, вакансии и объявления на одном городском портале.

Стилна визия и функционалност в най-новите спортни екипи

дамски спортни комплекти http://sportni-komplekti.com/ .

Моделите дамски тениски, които никога не излизат от мода

интересни дамски тениски https://teniski-damski.com/ .

Здесь доступен мессенджер-бот “Глаз Бога”, который проверить всю информацию о гражданине через открытые базы.

Инструмент функционирует по фото, обрабатывая доступные данные в сети. Через бота доступны бесплатный поиск и полный отчет по запросу.

Инструмент актуален на август 2024 и охватывает фото и видео. Бот гарантирует узнать данные в открытых базах и предоставит сведения за секунды.

Глаз Бога glazboga.net

Это сервис — помощник при поиске персон удаленно.

Стилни блузи с акцент върху талията и женствените форми

елегантни дамски блузи с къс ръкав http://www.bluzi-damski.com/ .

¿Quieres códigos promocionales vigentes de 1xBet? Aquí encontrarás recompensas especiales en apuestas deportivas .

El código 1x_12121 garantiza a hasta 6500₽ durante el registro .

Para completar, canjea 1XRUN200 y disfruta una oferta exclusiva de €1500 + 150 giros gratis.

https://kylerwhra96318.ttblogs.com/15093132/descubre-cómo-usar-el-código-promocional-1xbet-para-apostar-free-of-charge-en-argentina-méxico-chile-y-más

Mantente atento las novedades para conseguir más beneficios .

Todos los códigos son verificados para hoy .

¡Aprovecha y potencia tus apuestas con esta plataforma confiable!

В этом ресурсе вы можете получить доступ к боту “Глаз Бога” , который способен собрать всю информацию о любом человеке из открытых источников .

Данный сервис осуществляет проверку ФИО и раскрывает данные из онлайн-платформ.

С его помощью можно пробить данные через специализированную платформу, используя фотографию в качестве ключевого параметра.

probiv-bot.pro

Система “Глаз Бога” автоматически анализирует информацию из проверенных ресурсов, формируя структурированные данные .

Пользователи бота получают ограниченное тестирование для ознакомления с функционалом .

Сервис постоянно совершенствуется , сохраняя скорость обработки в соответствии с законодательством РФ.

Найти мастера по ремонту холодильников в Киеве можно на сайте

Строительство деревянных домов с акцентом на энергоэффективность и уют

дома деревянные под ключ https://stroitelstvo-derevyannyh-domov78.ru/ .

Уникальные маршруты и виды с борта арендованной яхты

аренда яхт в сочи https://arenda-yahty-sochi323.ru .

resume software engineer ats engineering resumes examples

Мир полон тайн https://phenoma.ru читайте статьи о малоизученных феноменах, которые ставят науку в тупик. Аномальные явления, редкие болезни, загадки космоса и сознания. Доступно, интересно, с научным подходом.

Читайте о необычном http://phenoma.ru научно-популярные статьи о феноменах, которые до сих пор не имеют однозначных объяснений. Психология, физика, биология, космос — самые интересные загадки в одном разделе.

Профессиональная уборка после пожара, залива и ЧС

заказать клининг https://kliningovaya-kompaniya0.ru/ .

Шины всех типоразмеров в наличии в крупном онлайн-магазине

магазин шин и дисков https://www.kupit-shiny0-spb.ru .

Комплексная услуга: замер, производство, доставка и монтаж стеклянных душевых ограждений

п образные душевые стекла https://www.steklo777777.ru .

раздача стим аккаунтов бесплатно https://t.me/s/Burger_Game

resume for engineering jobs resume software engineer ats

стим аккаунт бесплатно без игр http://t.me/Burger_Game

Looking for exclusive 1xBet promo codes? This site offers verified bonus codes like 1x_12121 for registrations in 2024. Claim up to 32,500 RUB as a first deposit reward.

Use official promo codes during registration to maximize your rewards. Enjoy risk-free bets and exclusive deals tailored for sports betting.

Find monthly updated codes for global users with fast withdrawals.

Every promotional code is checked for validity.

Don’t miss limited-time offers like GIFT25 to increase winnings.

Active for first-time deposits only.

https://socialistener.com/story5150316/unlocking-1xbet-promo-codes-for-enhanced-betting-in-multiple-countriesStay ahead with 1xBet’s best promotions – apply codes like 1x_12121 at checkout.

Experience smooth rewards with instant activation.

Заказывайте сувениры с логотипом — работаем по всей России

изготовление сувенирной продукции с логотипом https://suvenirnaya-produktsiya-s-logotipom-1.ru .

Научно-популярный сайт https://phenoma.ru — малоизвестные факты, редкие феномены, тайны природы и сознания. Гипотезы, наблюдения и исследования — всё, что будоражит воображение и вдохновляет на поиски ответов.

resume as a engineer chief engineer cv example

Подбор оптимального лизинга по параметрам с помощью автоматизированного маркетплейса

маркетплейс лизинга https://lizingovyy-agregator.ru .

Offline Mode: Ready to take a break from online connectivity? Aviator APK offers an offline mode where you can practice your skills or simply enjoy the game without an internet connection. Entdecken Sie die Faszination von Plinko: Das beliebteste Spiel der Glücksritter! Die Geschichte von Plinko Wie Plinko gespielt wird Strategien für erfolgreiches Plinko-Spiel Die Psychologie des Spiels Online-Plinko: Die digitale Version des Erfolgs Die Zukunft von Plinko Fazit Entdecken Sie die Faszination von Plinko: Das beliebteste Spiel der Glücksritter! Willkommen in der Welt von Plinko, Do stabilnej pracy apk Aviator wymaga dobrego połączenia internetowego. Regularnie pobieraj i aktualizuj aplikację gry, aby uzyskać dostęp do nowych funkcji i ulepszeń bezpieczeństwa. Statystyki pokazują, że 7,8% graczy preferuje mobilną wersję Aviatora ze względu na wygodę i dostępność.

https://fitwell.qa/aviator-betway-login-jak-rozpoczac-gre-krok-po-kroku/

Book of Ra Deluxe App Name: IZIBET Bet TrackerPackage Name: com.izibet.retailmobileapp.androidCategory: Sports BettingVersion: Size: Last Updated: Developer: IZIBET Explore the world of sports betting with confidence—download the IZIBET Bet Tracker APK today and take the first step towards an organized and informed betting experience! Explore the world of sports betting with confidence—download the IZIBET Bet Tracker APK today and take the first step towards an organized and informed betting experience! Explore the world of sports betting with confidence—download the IZIBET Bet Tracker APK today and take the first step towards an organized and informed betting experience! Posiada cenne 4 letnie doświadczenie w branży. Ula zaczynała jako kierownik serwisu, a na samym początku pracowała jako niezależna pisarka. Na ten moment pracuje jako content manager, sprawując pieczę nad treścią i zawartością naszego portalu. To ona jest odpowiedzialna za sprawdzanie reputacji kasyna i wszelkie rekomendacje, jeśli chodzi o gry i platformy hazardowe.

Научно-популярный сайт https://phenoma.ru — малоизвестные факты, редкие феномены, тайны природы и сознания. Гипотезы, наблюдения и исследования — всё, что будоражит воображение и вдохновляет на поиски ответов.

Премиум-отдых на воде: аренда яхты с индивидуальным сервисом

яхты сочи яхты сочи .

В этом ресурсе вы можете найти боту “Глаз Бога” , который способен получить всю информацию о любом человеке из открытых источников .

Этот мощный инструмент осуществляет проверку ФИО и предоставляет детали из государственных реестров .

С его помощью можно пробить данные через специализированную платформу, используя фотографию в качестве начальных данных .

пробить авто по номеру

Технология “Глаз Бога” автоматически обрабатывает информацию из открытых баз , формируя структурированные данные .

Клиенты бота получают ограниченное тестирование для тестирования возможностей .

Платформа постоянно развивается, сохраняя высокую точность в соответствии с требованиями времени .

Сертификация и лицензии — ключевой аспект ведения бизнеса в России, обеспечивающий защиту от неквалифицированных кадров.

Декларирование продукции требуется для подтверждения безопасности товаров.

Для торговли, логистики, финансов необходимо специальных разрешений.

https://ok.ru/group/70000034956977/topic/158840759957681

Игнорирование требований ведут к приостановке деятельности.

Добровольная сертификация помогает повысить доверие бизнеса.

Соблюдение норм — залог успешного развития компании.

Прямо здесь вы найдете сервис “Глаз Бога”, который найти всю информацию по человеку по публичным данным.

Инструмент активно ищет по ФИО, обрабатывая публичные материалы в сети. С его помощью доступны бесплатный поиск и полный отчет по запросу.

Инструмент проверен на 2025 год и охватывает фото и видео. Сервис гарантирует найти профили в соцсетях и покажет информацию за секунды.

https://glazboga.net/

Такой сервис — помощник при поиске персон онлайн.

These days, it feels like football or soccer (as it is known in the US) is everywhere! Are you enjoying the FIFA Women’s World Cup in France? What about the exciting Copa América? Are you following the Total Africa Cup of Nations? If you like the game and want to expand your Spanish vocabulary of football terms, this lesson introduces some of the most common football soccer vocabulary words in Spanish. When it came to the fourth for each country, Sergio Ramos, who had blazed high over the bar in the Champions League semi-final shootout, clipped in a la Pirlo. “It seems to be fashionable now,” Del Bosque said. “I was delighted with it.” Bruno Alves then thumped his off the underside of the bar and away. As the ball bounced free, it took Portugal’s chances of reaching the final with it.

https://headlice.co.il/review-del-juego-de-casino-online-balloon-apk-de-smartsoft-para-jugadores-en-venezuela/

1Win Lucky Jet tiene una serie de características que facilitan el juego. Puede dejar que el ordenador gestione sus apuestas y retiradas. Juego de Lucky Jet 1win – Juega Los profesionales avanzados recomiendan integrar múltiples metodologías mientras se adaptan a los patrones emergentes en Lucky Jet 1win. El análisis estadístico sugiere que los mejores resultados suelen surgir de una gestión equilibrada de riesgos combinada con un timing estratégico cuando juegas a Lucky Jet por dinero real. Aquí tienes consejos para ayudarte a ganar dinero en Lucky Jet Casino: El sitio web oficial de Lucky Bet le permite jugar juegos de choque, pero debe iniciar sesión, sin registrarse, muchas funciones de Lucky Jet no estarán disponibles. Las llamadas señales Lucky Jet o “signals” en inglés hacen referencia a la supuesta existencia de guías o apps que adivinan los resultados durante el uso del mini game. Estas apps o guías se ofertan como dispositivos externos, o desde bots en redes sociales como Tik Tok. De igual forma, se ofrecen como apps para celulares con sistema operativo Android. En cualquier caso, la promesa que hacen es la de conocer los resultados de forma anticipada en cada ronda del juego Lucky Jet México.

¿Quieres promocódigos recientes de 1xBet? En este sitio podrás obtener bonificaciones únicas para apostar .

El promocódigo 1x_12121 ofrece a hasta 6500₽ al registrarte .

También , canjea 1XRUN200 y disfruta una oferta exclusiva de €1500 + 150 giros gratis.

https://kennaharde4.wixsite.com/my-site-1

Mantente atento las promociones semanales para conseguir recompensas adicionales .

Todos los códigos están actualizados para hoy .

No esperes y potencia tus oportunidades con esta plataforma confiable!

Aliraza2024-01-07 22:56:35 App market for 100% working mods. Requires Android Shake up the Lightning multipliers! select vTitle, vContent from gen_updates where cStatus = ‘A’ and cProperty = ‘DRW’ and (‘2025-05-22 21:27:46’ between dFrom and dTo) ORDER BY iRank DESC LIMIT 1 100% working on devices Accelerated for downloading big mod files. Strategic Card Game Set in the Three Kingdoms Welcome to Dragon Tiger Predictions with GPT, this prediction tool app can analyze the input pattern and provide you predictions. +1 212-334-0212 Dragon Vs Tiger predict GPT tool offers a variety of features for supporting users such as: Was the prediction correct? Lightning Sic Bo Strategic Card Game Set in the Three Kingdoms select vTitle, vContent from gen_updates where cStatus = ‘A’ and cProperty = ‘DRW’ and (‘2025-05-22 21:28:12’ between dFrom and dTo) ORDER BY iRank DESC LIMIT 1

https://wp15-c13013-1.btsndrc.ac/balloon-game-by-smartsoft-pros-and-cons-of-linking-wallets-via-app-vs-web-for-indian-players/

Country & currency Country & currency Country & currency Country & currency Find your new pastime among our hobby kits Founded in 1985, the Volkswagen Group China has made serious tracks in keeping the Volkswagen name alive. In 2020, they established the Vertical Mobility Project to develop sustainable electrical aircrafts with the catchy tagline: ‘In China, For China’. Soft toys Flying Tiger Copenhagen Fat tiger Zhao TiezhuHe has always been “kind-hearted and fat”Galloping in the rivers and lakes Express yourself with colourful and playful designs When you choose FSC®-certified goods, you support the responsible use of the world’s forests, and you help to take care of the animals and people who live in them. Look for the FSC mark on our products and read more at flyingtiger fsc

Jarvi — корм для питомцев, которым вы можете доверять

кошачий сухой корм jarvi холистик отзывы кошачий сухой корм jarvi холистик отзывы .

Прямо здесь можно получить мессенджер-бот “Глаз Бога”, позволяющий проверить сведения о гражданине по публичным данным.

Сервис функционирует по фото, используя доступные данные в сети. С его помощью осуществляется пять пробивов и полный отчет по имени.

Сервис обновлен на 2025 год и поддерживает фото и видео. Сервис поможет узнать данные в соцсетях и предоставит сведения за секунды.

https://glazboga.net/

Данный сервис — помощник в анализе людей через Telegram.

Looking for exclusive 1xBet promo codes ? Here is your ultimate destination to discover valuable deals designed to boost your wagers.

If you’re just starting or a seasoned bettor , the available promotions guarantees exclusive advantages across all bets.

Keep an eye on seasonal campaigns to multiply your rewards.

https://www.bitsdujour.com/profiles/Tn5Rcx

All listed codes are frequently updated to guarantee reliability this month .

Don’t miss out of exclusive perks to enhance your odds of winning with 1xBet.

Full hd film kategorisinde ödüllü yapımlar sizi bekliyor

hd fil izle hdturko.com .

На данном сайте вы можете получить доступ к боту “Глаз Бога” , который может получить всю информацию о любом человеке из открытых источников .

Этот мощный инструмент осуществляет проверку ФИО и предоставляет детали из государственных реестров .

С его помощью можно проверить личность через специализированную платформу, используя имя и фамилию в качестве начальных данных .

пробив авто бесплатно

Система “Глаз Бога” автоматически собирает информацию из открытых баз , формируя подробный отчет .

Подписчики бота получают ограниченное тестирование для проверки эффективности.

Решение постоянно развивается, сохраняя актуальность данных в соответствии с законодательством РФ.

На данном сайте доступен сервис “Глаз Бога”, позволяющий проверить всю информацию о человеке из открытых источников.

Сервис работает по ФИО, обрабатывая актуальные базы в Рунете. Через бота доступны пять пробивов и полный отчет по запросу.

Сервис обновлен на август 2024 и охватывает фото и видео. Бот поможет узнать данные в открытых базах и отобразит результаты мгновенно.

https://glazboga.net/

Это бот — помощник для проверки граждан удаленно.

Гагры круглый год: отдых в любое время и по любому бюджету

гагра снять жилье otdyh-gagry.ru .

Searching for latest 1xBet promo codes? Our platform offers working promotional offers like GIFT25 for registrations in 2025. Get up to 32,500 RUB as a welcome bonus.

Use trusted promo codes during registration to boost your bonuses. Benefit from no-deposit bonuses and exclusive deals tailored for sports betting.

Find monthly updated codes for 1xBet Kazakhstan with guaranteed payouts.

Every voucher is checked for accuracy.

Don’t miss exclusive bonuses like 1x_12121 to increase winnings.

Active for first-time deposits only.

https://www.askmeclassifieds.com/user/profile/2296810Stay ahead with top bonuses – enter codes like 1XRUN200 at checkout.

Enjoy seamless rewards with instant activation.

¿Quieres cupones vigentes de 1xBet? En este sitio descubrirás recompensas especiales para tus jugadas.

El promocódigo 1x_12121 ofrece a un bono de 6500 rublos durante el registro .

Para completar, canjea 1XRUN200 y obtén un bono máximo de 32500 rublos .

https://hallbook.com.br/blogs/599951/Code-promo-1XBET-pour-bonus-VIP-de-130

Mantente atento las novedades para acumular más beneficios .

Las ofertas disponibles están actualizados para 2025 .

¡Aprovecha y maximiza tus apuestas con esta plataforma confiable!

Здесь доступен мощный бот “Глаз Бога” , который получает данные о любом человеке из общедоступных ресурсов .

Инструмент позволяет пробить данные по ФИО , раскрывая информацию из онлайн-платформ.

https://glazboga.net/

Kaliteden ödün vermeyenler için özel seçilmiş full hd film yapımları

4 k filim izle https://www.filmizlehd.co/ .