Introduction: Generative AI has ushered in transformative possibilities, but alongside its advancements lies the spectre of AI hallucinations. A hallucination occurs when a model produces fabricated or factually incorrect output, often because it lacks sufficient context to ground its answer. The consequences can be dire, ranging from the spread of misinformation to life-threatening situations.

Research Insights: A recent study evaluating large language models (LLMs) revealed alarming statistics: nearly half of generated texts contained factual errors, while over half exhibited discourse flaws. Logical fallacies and the generation of personally identifiable information (PII) further compounded the issue. Such findings underscore the urgent need to address AI hallucinations.

Initiatives for Addressing Hallucinations: In response to the pressing need for reliability, initiatives such as Hugging Face’s Hallucinations Leaderboard have emerged. By ranking LLMs according to how often they hallucinate, these platforms aim to steer researchers and engineers toward more trustworthy models. Beyond benchmarking, two complementary approaches are crucial for tackling hallucinations: mitigation and detection.
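To make ranking by hallucination level concrete, here is a minimal Python sketch. It assumes each model's responses have already been judged (by humans or an automated checker) as hallucinated or not, and it ranks models by the fraction flagged. The `Judgment` type, the helper functions, and the model names are hypothetical. A single aggregate rate is also a simplification, and this is not the leaderboard's actual methodology.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    """One evaluated response (hypothetical record format)."""
    model: str          # which model produced the response
    hallucinated: bool  # whether the response was judged a hallucination


def hallucination_rates(judgments: list[Judgment]) -> dict[str, float]:
    """Fraction of each model's responses that were judged hallucinated."""
    totals: dict[str, int] = {}
    flagged: dict[str, int] = {}
    for j in judgments:
        totals[j.model] = totals.get(j.model, 0) + 1
        flagged[j.model] = flagged.get(j.model, 0) + int(j.hallucinated)
    return {m: flagged[m] / totals[m] for m in totals}


def rank_models(judgments: list[Judgment]) -> list[tuple[str, float]]:
    """Lowest hallucination rate first; ties are broken alphabetically."""
    rates = hallucination_rates(judgments)
    return sorted(rates.items(), key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    # Toy, made-up judgments purely for illustration.
    sample = [
        Judgment("model-a", False),
        Judgment("model-a", True),
        Judgment("model-b", False),
        Judgment("model-b", False),
        Judgment("model-b", True),
    ]
    for name, rate in rank_models(sample):
        print(f"{name}: {rate:.2f}")
```

In the toy data, `model-b` is flagged on one of three responses and `model-a` on one of two, so the sketch prints `model-b: 0.33` followed by `model-a: 0.50`.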

Mitigation Strategies: Mitigation strategies aim to reduce how often hallucinations occur in the first place. Techniques include prompt engineering, Retrieval-Augmented Generation (RAG), and architectural improvements. RAG, for example, grounds the model's output in documents retrieved at query time; it offers enhanced data privacy and reduced hallucination levels, making it a promising avenue for improvement.
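The retrieval step behind RAG, combined with a simple prompt-engineering guardrail, can be sketched in Python as follows. This is an illustrative assumption, not a production pipeline: it scores documents with bag-of-words cosine similarity instead of learned embeddings, and it only builds the grounded prompt, leaving the call to an actual LLM out of scope. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Assemble a prompt that restricts the model to the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using ONLY the context below. "
        "If the context does not contain the answer, reply \"I don't know.\"\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    corpus = [
        "The Eiffel Tower is located in Paris.",
        "Mount Everest is the highest mountain above sea level.",
        "Python was first released in 1991.",
    ]
    print(build_grounded_prompt("When was Python first released?", corpus, k=1))
```

The design choice worth noting is the instruction to answer only from the supplied context and to admit ignorance otherwise: that is the prompt-engineering half of the mitigation, while retrieval supplies the facts the model would otherwise have to guess.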

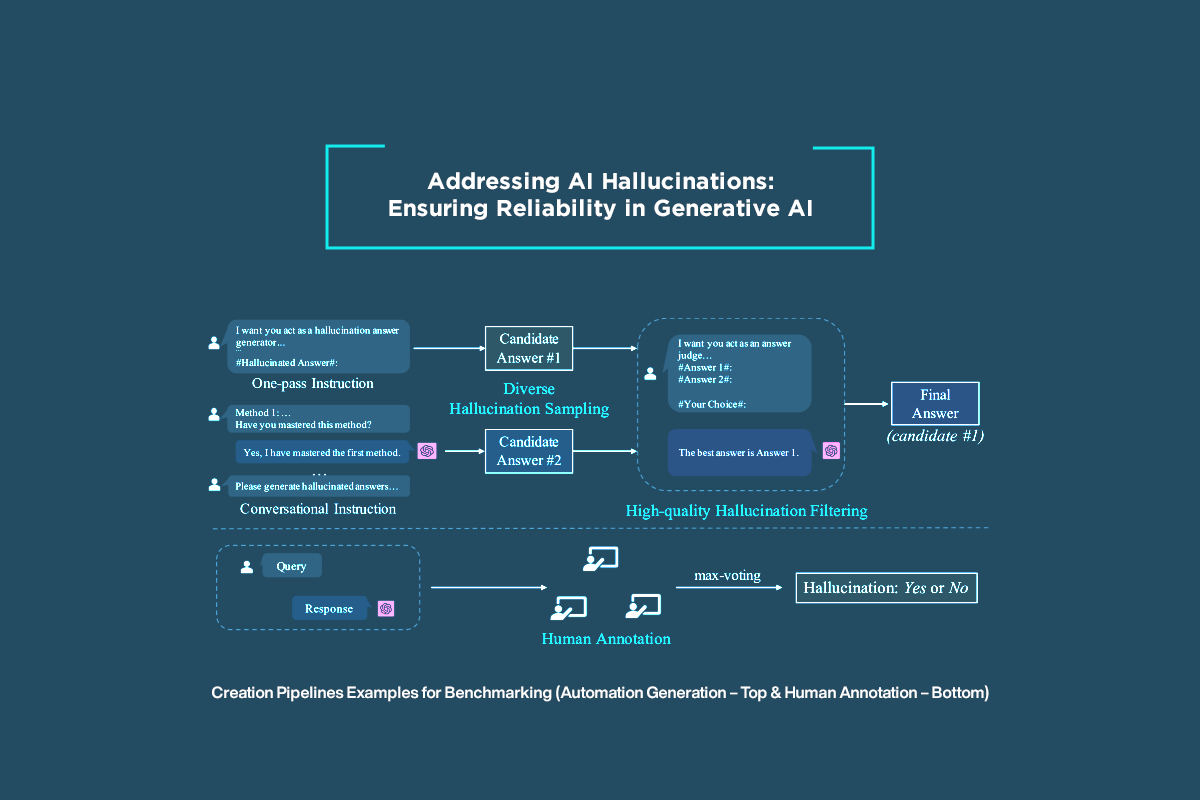

Detection Methods: Detecting hallucinations is equally vital for ensuring AI reliability. Benchmark datasets such as HADES and tools such as Neural Path Hunter (NPH) help identify hallucinated content and, in NPH's case, refine it. HaluEval, a benchmark for evaluating how well LLMs recognize hallucinations, provides valuable insight into the efficacy of detection methods.
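As a rough illustration of how a detector might be scored against labeled data in the spirit of HaluEval, the Python sketch below flags a response as hallucinated when too large a share of its content words never appear in the reference knowledge, then measures accuracy against gold labels. The token-overlap heuristic, the 0.3 threshold, the `Sample` fields, the stopword list, and the example data are all assumptions for illustration. HaluEval itself evaluates LLMs by asking them to judge whether samples contain hallucinations, and real detectors rely on far stronger signals than word overlap.

```python
import re
from dataclasses import dataclass

# Hypothetical, minimal stopword list used to ignore function words.
STOPWORDS = {"the", "a", "an", "is", "was", "of", "in", "on", "and", "to", "it", "by"}


@dataclass
class Sample:
    knowledge: str      # reference text the response should be grounded in
    response: str       # model output to check
    hallucinated: bool  # gold label


def content_words(text: str) -> set[str]:
    """Lowercased word tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def unsupported_ratio(knowledge: str, response: str) -> float:
    """Share of the response's content words that never appear in the knowledge."""
    resp = content_words(response)
    if not resp:
        return 0.0
    return len(resp - content_words(knowledge)) / len(resp)


def detect(sample: Sample, threshold: float = 0.3) -> bool:
    """Flag a response as hallucinated if too many of its words are unsupported."""
    return unsupported_ratio(sample.knowledge, sample.response) > threshold


def accuracy(samples: list[Sample], threshold: float = 0.3) -> float:
    """Fraction of samples where the detector's verdict matches the gold label."""
    correct = sum(detect(s, threshold) == s.hallucinated for s in samples)
    return correct / len(samples)


if __name__ == "__main__":
    fact = "Marie Curie won Nobel Prizes in physics and chemistry."
    data = [
        Sample(fact, "Marie Curie won Nobel Prizes in physics and chemistry.", False),
        Sample(fact, "Marie Curie won a Nobel Prize in literature in 1925.", True),
    ]
    print(accuracy(data))  # prints 1.0
```

The faithful response has no unsupported words, while the fabricated one has three unsupported words out of seven (`prize`, `literature`, `1925`) and crosses the threshold. The crude matching counts `prize` as unsupported only because the knowledge says `prizes`, which shows why word overlap alone is a weak signal.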

Implications and Future Directions: Addressing AI hallucinations is not merely a technical challenge but a critical step toward fostering trust in AI applications. As businesses increasingly rely on AI for decision-making, the need for reliable, error-free outputs becomes paramount. Ongoing research and development are essential to advance the field and promote responsible AI.

Conclusion: In the quest for AI innovation, addressing hallucinations is a fundamental imperative. By implementing mitigation and detection strategies and leveraging emerging technologies, we can pave the way for more reliable and trustworthy AI systems. Ultimately, this journey toward AI reliability holds the key to unlocking the full potential of Generative AI for the benefit of businesses and society.